LinkTransformer

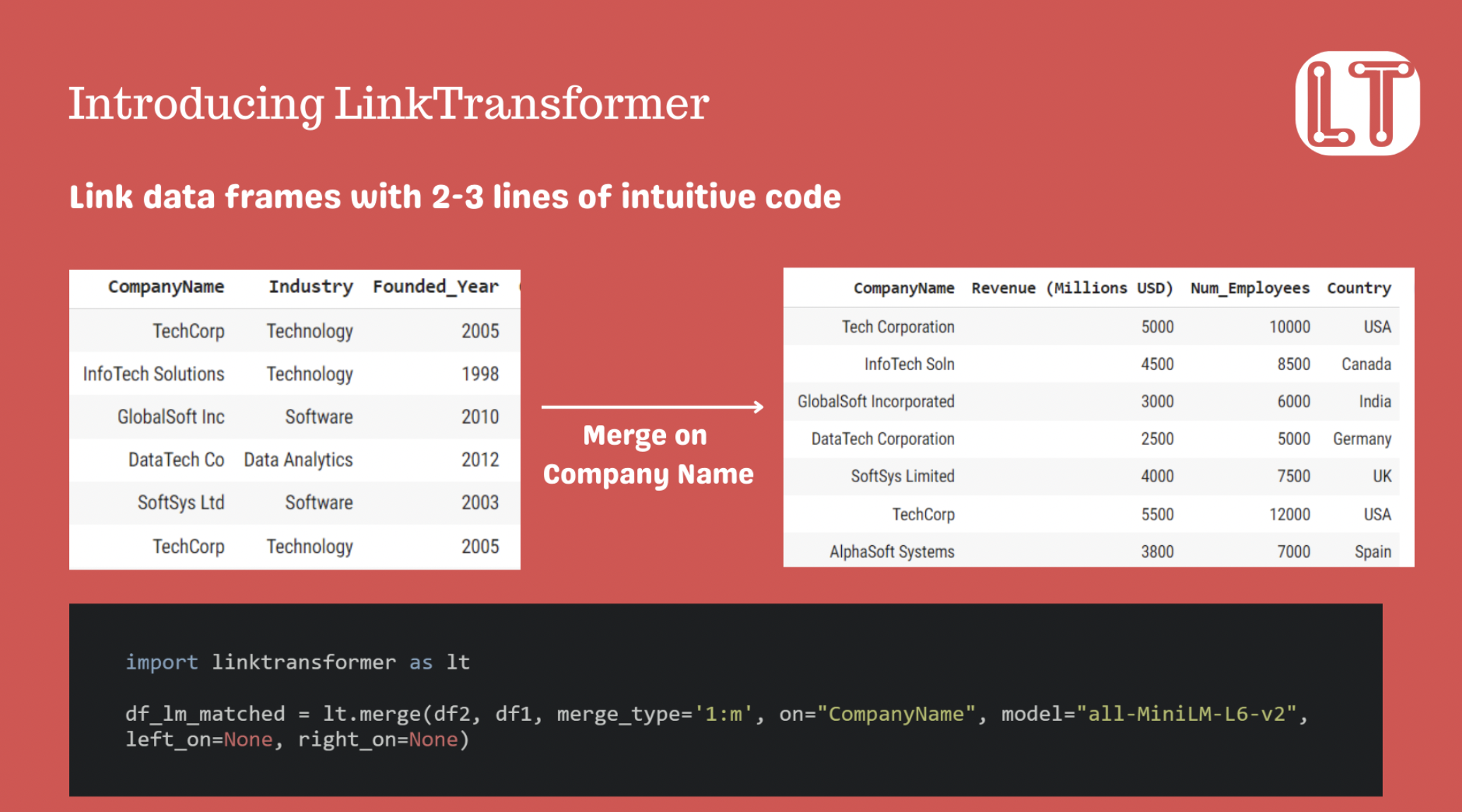

Introducing LinkTransformer, which brings the advantages of AI to common data frame manipulation tasks such as merges, deduplication, and clustering, making it easy to use large language models in a standard data wrangling workflow.

Merge with transformer language models as you would in pandas. The API is designed to be as simple as possible and familiar to practitioners coming from other environments such as R and Stata.
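To make the idea of an embedding-based merge concrete, here is a minimal, standard-library-only sketch. It is not LinkTransformer's API or implementation: the functions `embed`, `cosine`, and `semantic_merge` are hypothetical names, and a character trigram count stands in for a transformer embedding so the example runs without downloading a model. The `_x`/`_y` suffixes simply mimic the pandas merge convention.

```python
import math
from collections import Counter


def embed(text, n=3):
    """Toy stand-in for a transformer embedding: character n-gram counts."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def semantic_merge(left, right, on):
    """Pair each left row with its most similar right row on the key `on`."""
    right_vecs = [embed(row[on]) for row in right]
    merged = []
    for row in left:
        vec = embed(row[on])
        scores = [cosine(vec, rv) for rv in right_vecs]
        best = max(range(len(right)), key=scores.__getitem__)
        merged.append({
            **{f"{k}_x": v for k, v in row.items()},          # left columns
            **{f"{k}_y": v for k, v in right[best].items()},  # matched right columns
            "score": scores[best],                             # similarity of the match
        })
    return merged


# Hypothetical example data: the keys never match exactly.
left = [{"company": "Toyota Motor Corp"}, {"company": "Sony Group"}]
right = [
    {"company": "Sony Group Corporation", "hq": "Tokyo"},
    {"company": "Toyota Motor Corporation", "hq": "Toyota City"},
]
matches = semantic_merge(left, right, on="company")
for m in matches:
    print(m["company_x"], "->", m["company_y"], round(m["score"], 2))
```

An exact-key join would find no matches here. Replacing the toy `embed` with a real language model embedding is what lets this kind of merge handle abbreviations, misspellings, and other non-exact keys.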

LinkTransformer supports all models on the Hugging Face Hub as well as OpenAI embedding models. We’ve also trained our own collection of over 20 open-source language models for different languages and tasks. See our guide to selecting models for help choosing one.

LinkTransformer supports a wide range of data wrangling tasks with transformers: standard merging, merging with blocking or multiple keys, cross-lingual merges (no need to translate), one-to-many (1:m) and many-to-many (m:m) merges, aggregation/classification, clustering, and de-duplication. See our examples for each task.
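As an illustration of the de-duplication task, the sketch below groups records whose similarity exceeds a threshold and keeps the first record of each group. This is an assumption-laden toy, not LinkTransformer's method: `dedup` is a hypothetical function, the 0.6 threshold is arbitrary, character trigram counts stand in for transformer embeddings, and the grouping is simple single-linkage clustering via union-find.

```python
import math
from collections import Counter


def embed(text, n=3):
    """Toy stand-in for a transformer embedding: character n-gram counts."""
    padded = f" {text.lower()} "
    return Counter(padded[i:i + n] for i in range(len(padded) - n + 1))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[k] * b[k] for k in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(
        sum(v * v for v in b.values())
    )
    return dot / norm if norm else 0.0


def dedup(names, threshold=0.6):
    """Keep the first record from each cluster of near-duplicates."""
    vecs = [embed(name) for name in names]
    parent = list(range(len(names)))  # union-find forest over record indices

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving
            i = parent[i]
        return i

    # Link every pair of records that is similar enough (single linkage).
    for i in range(len(names)):
        for j in range(i + 1, len(names)):
            if cosine(vecs[i], vecs[j]) >= threshold:
                parent[find(j)] = find(i)

    # Walk records in order and keep the first one seen in each cluster.
    seen, kept = set(), []
    for i, name in enumerate(names):
        root = find(i)
        if root not in seen:
            seen.add(root)
            kept.append(name)
    return kept


# Hypothetical example data with spelling variants of two firms.
names = [
    "Acme Widgets Inc",
    "ACME Widgets Inc.",
    "Globex Corporation",
    "Acme Widgets, Inc",
    "Globex Corp",
]
unique = dedup(names)
print(unique)
```

The all-pairs comparison is quadratic in the number of records, which is one reason blocking (comparing only records that share a coarse key) and approximate nearest-neighbor search matter at scale.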

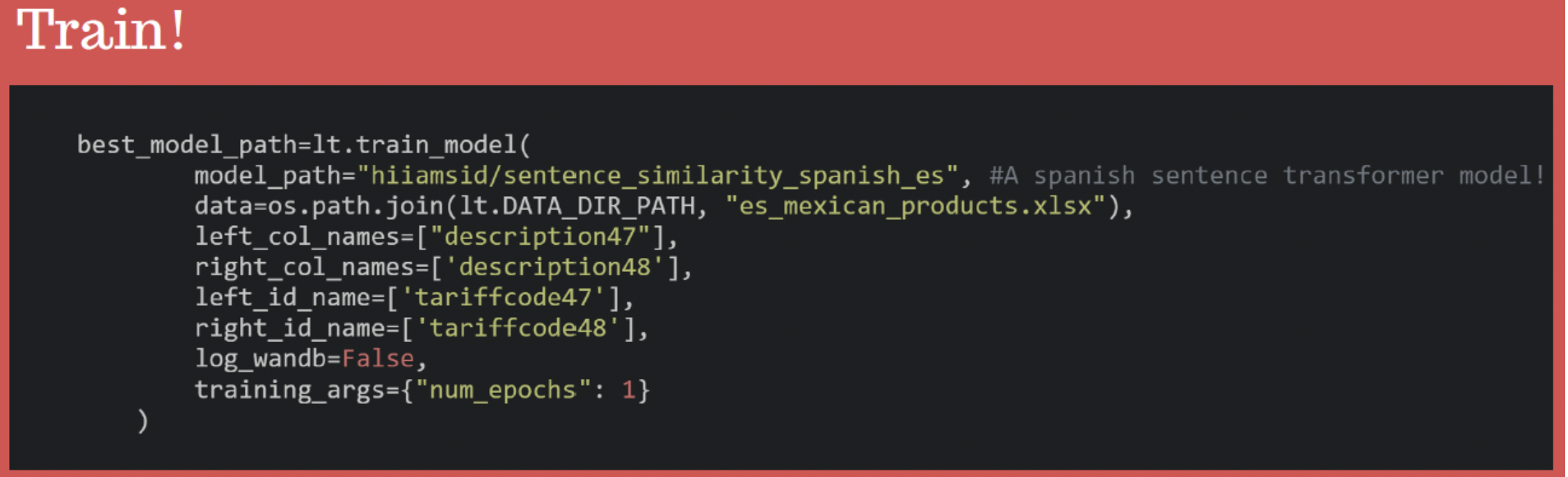

Training your own models takes as little as one line of code, with most of the heavy lifting done behind the scenes. You can fine-tune any pretrained model from Hugging Face. Learn more at our repo and in the demo notebook.

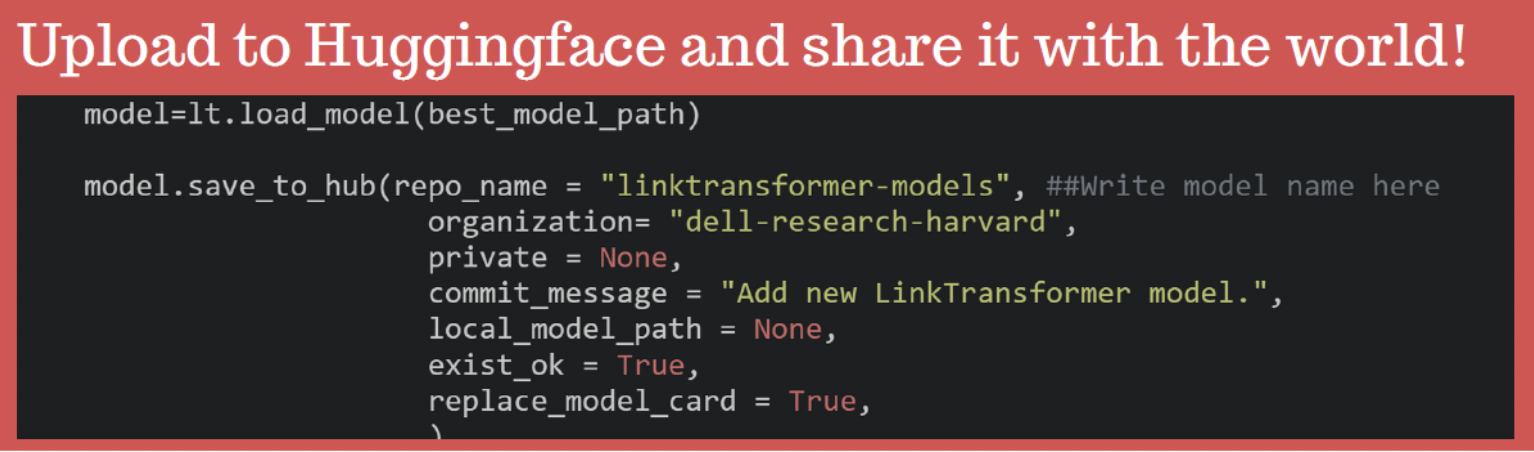

LinkTransformer aims to create a community for deep record linkage, streamlining the distribution of record linkage models and promoting the reusability and reproducibility of pipelines. Users can tag and share their models on the Hugging Face Hub with a single line of code.

This is our initial release, and we welcome feedback via GitHub. Planned features for the next release include integrating vision transformer models for visual record linkage (forgo OCR altogether!) and FAISS GPU support.

If you find LinkTransformer useful, please cite it and consider starring our repo. We funded LT out of the PI’s very limited unrestricted funds, and to maintain and expand it we need to show potential funders that it is having a positive impact on the community!