More on Transformer Language Models

Topics

This post covers the fifth lecture in the course: “More on Transformer Language Models.”

This lecture continues our discussion of transformer language models, focusing on the interpretation and visualization of textual embeddings and on the challenges, and interesting questions, raised by evolving language.

Lecture Video

Part 1

Part 2

Lecture notes 1

Lecture notes 2

References Cited in Lecture 5: More on Transformer Language Models

Academic Papers

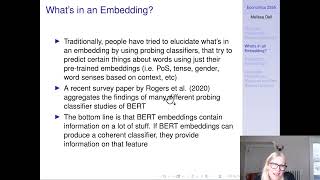

Interpreting Textual Embeddings

- McInnes, Leland, John Healy, and James Melville. “UMAP: Uniform manifold approximation and projection for dimension reduction.” arXiv preprint arXiv:1802.03426 (2018).

- Köhn, Arne. “What’s in an embedding? Analyzing word embeddings through multilingual evaluation.” In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, pp. 2067-2073. 2015.

- Rogers, Anna, Olga Kovaleva, and Anna Rumshisky. “A primer in BERTology: What we know about how BERT works.” Transactions of the Association for Computational Linguistics 8 (2020): 842-866.

- Wiedemann, Gregor, Steffen Remus, Avi Chawla, and Chris Biemann. “Does BERT make any sense? Interpretable word sense disambiguation with contextualized embeddings.” arXiv preprint arXiv:1909.10430 (2019).

- Coenen, Andy, Emily Reif, Ann Yuan, Been Kim, Adam Pearce, Fernanda Viégas, and Martin Wattenberg. “Visualizing and measuring the geometry of BERT.” arXiv preprint arXiv:1906.02715 (2019).

- Ethayarajh, Kawin. “How contextual are contextualized word representations? Comparing the geometry of BERT, ELMo, and GPT-2 embeddings.” arXiv preprint arXiv:1909.00512 (2019).

- Merchant, Amil, Elahe Rahimtoroghi, Ellie Pavlick, and Ian Tenney. “What Happens to BERT Embeddings During Fine-tuning?” arXiv preprint arXiv:2004.14448 (2020).

Changing Language

- Manjavacas, Enrique, and Lauren Fonteyn. “MacBERTh: Development and evaluation of a historically pre-trained language model for English (1450-1950).” In Proceedings of the Workshop on Natural Language Processing for Digital Humanities, pp. 23-36. 2021.

- Amba Hombaiah, Spurthi, Tao Chen, Mingyang Zhang, Michael Bendersky, and Marc Najork. “Dynamic language models for continuously evolving content.” In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, pp. 2514-2524. 2021.

- Manjavacas, Enrique, and Lauren Fonteyn. “Adapting vs Pre-training Language Models for Historical Languages.” (2022).

- Soni, Sandeep, David Bamman, and Jacob Eisenstein. “Predicting Long-Term Citations from Short-Term Linguistic Influence.” Findings of the Association for Computational Linguistics: EMNLP 2022 (2022).

Other Resources

- TensorFlow Embedding Projector: http://projector.tensorflow.org
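
For readers who want to try the Embedding Projector on their own embeddings, the following is a minimal sketch of one way to prepare the two tab-separated files that its "Load" option accepts: one file with a vector per line, and one with a label per line in the same order. The function name `to_projector_tsv`, the toy vectors, and the labels are all hypothetical and only for illustration; in practice the vectors would come from whatever embedding model is being studied.

```python
import csv
import io


def to_projector_tsv(vectors, labels):
    """Return (vectors_tsv, metadata_tsv) as strings.

    vectors: list of equal-length lists of floats (one embedding per item).
    labels:  list of strings, one per embedding, in the same order.
    """
    if len(vectors) != len(labels):
        raise ValueError("need exactly one label per vector")

    vec_buf, meta_buf = io.StringIO(), io.StringIO()
    vec_writer = csv.writer(vec_buf, delimiter="\t", lineterminator="\n")
    meta_writer = csv.writer(meta_buf, delimiter="\t", lineterminator="\n")

    for vector, label in zip(vectors, labels):
        # One embedding per line, dimensions separated by tabs.
        vec_writer.writerow([f"{x:.6f}" for x in vector])
        # Single-column metadata: one label per line, no header row.
        meta_writer.writerow([label])

    return vec_buf.getvalue(), meta_buf.getvalue()


# Toy 3-dimensional "embeddings" (made up for illustration).
vectors = [[0.1, 0.2, 0.3], [0.4, 0.5, 0.6]]
labels = ["bank (river)", "bank (finance)"]

vectors_tsv, metadata_tsv = to_projector_tsv(vectors, labels)
print(vectors_tsv)
print(metadata_tsv)
```

The two strings can then be written to files (for example `vectors.tsv` and `metadata.tsv`, names chosen here only as an example) and uploaded through the projector's "Load" dialog. The sketch uses a single metadata column so that no header row is needed.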

Code Bases

Historical Language Models

- Hugging Face: open-source library with a large variety of NLP models, including MacBERTh and several other historically trained or fine-tuned language models.

Image Source: Devlin, J., Chang, M., Lee, K., Toutanova, K. (2018) BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding